Models with confidence

Artificial intelligence (AI) has been adopted across a wide range of sectors over the past decade, and it is increasingly used to optimize and automate industrial processes and tasks. Applications include quality assurance against production fluctuations, “smart factories” and predictive maintenance, “smart farming”, prognostic models for epidemics, assisted pathology, and image analysis and biometrics. There is no longer any doubt that we are heading toward a future in which AI and machine-learning-based algorithms become an increasingly integrated part of human society and everyday life.

However, it is important to remember that an AI system is still just a set of algorithms executed by a computer: algorithms that (with a few exceptions) can neither make risk assessments nor understand the consequences of bad choices. In some cases, wrong decisions can be catastrophic. In 2016, a passenger in a self-driving car died after the car’s autonomous system misclassified a white trailer as part of the sky (NHTSA, 2017), and in 2020, an innocent man was accused of a crime because of an error made by a facial recognition system (Hill, 2020). The database at www.incidentdatabase.ai/ gives an overview of accidents caused by decisions made by AI systems, although it probably covers only a very small fraction of them.

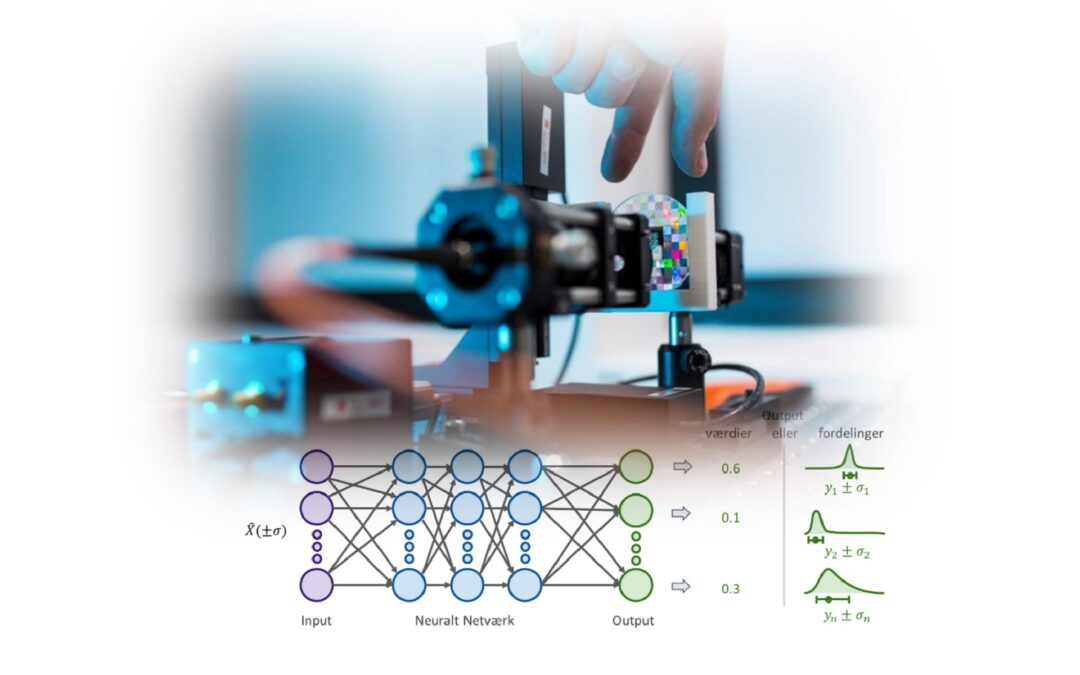

A fundamental shortcoming of many AI models is their inability to answer: “Thank you for asking, but I do not know.” As humans, we have a natural cognitive sense of when we are in doubt and when we actually know something. AI models, unfortunately, lack a comparable sense of when they are on safe ground and when they are not, and to the central question of whether “AI models know what they do not know”, the answer is often no. This is especially true when a model has to make decisions on new data unlike anything it has seen before. Rather than giving an essentially arbitrary answer, the model should ideally return an answer that is clearly flagged as highly uncertain.
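One simple way to let a classifier say “I do not know” is to threshold its confidence and abstain when the confidence is too low. The sketch below is a minimal illustration under assumed conditions, not the method described in this report: it converts a model’s raw scores (logits) into probabilities with a softmax and abstains when the highest probability falls below a threshold. The function names, the labels and the threshold of 0.8 are hypothetical. Note that softmax probabilities are known to be overconfident, particularly on inputs unlike the training data, so this is a baseline rather than a complete answer to the problem.

```python
import math
from typing import List, Optional, Sequence


def softmax(logits: Sequence[float]) -> List[float]:
    """Convert raw scores into probabilities that sum to 1."""
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def predict_or_abstain(
    logits: Sequence[float],
    labels: Sequence[str],
    threshold: float = 0.8,  # hypothetical cut-off; would need tuning in practice
) -> Optional[str]:
    """Return the most likely label, or None ("I do not know") if the
    model's top probability is below the threshold."""
    probs = softmax(logits)
    best = max(range(len(probs)), key=probs.__getitem__)
    if probs[best] < threshold:
        return None  # abstain instead of guessing
    return labels[best]


if __name__ == "__main__":
    labels = ["trailer", "sky"]  # hypothetical classes
    print(predict_or_abstain([4.0, 0.0], labels))  # confident case
    print(predict_or_abstain([0.3, 0.0], labels))  # uncertain case
```

With scores of 4.0 and 0.0 the top probability is about 0.98, so the model answers “trailer”; with 0.3 and 0.0 it is only about 0.57, so the function returns `None` and the decision can be handed to a human.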

You can read the full report here.